Trends

Powerful Technologies Are Converging and They Are Accelerating Human Evolution

The Luddites were an occasionally violent group of 19th-century English textile workers who raged against the industrial machines that were beginning to replace human workers. The Luddites’ anxieties were certainly understandable, if—as history would eventually bear out—misguided. Rather than crippling the economy, the mechanization the Luddites feared actually improved the standard of living for most Brits.

New positions that took advantage of these rising technologies and the cheaper wares they produced (eventually) supplanted the jobs that were lost.

Fast-forward to today and “Luddite” has become a derogatory term used to describe anyone with an irrational fear or distrust of new technology. The so-called “Luddite fallacy” has become near-dogma among economists as a way to describe and dismiss the fear that new technologies will eat up all the jobs and leave nothing in their place.

So, perhaps the HR assistant who’s been displaced by state-of-the-art applicant tracking software, or the cashier who got the boot in favor of a self-checkout kiosk, can take solace in the fact that the bomb that just blew up in their lives was merely clearing the way for a new, higher-skill job in their future. And why shouldn’t that be the case? This technology-employment paradigm has been validated by the past 200 or so years of history.

Yet some economists have openly wondered whether the Luddite fallacy might have an expiration date. The concept holds true only as long as workers are able to retrain for jobs in other parts of the economy that still need human labor.

So, in theory, there could very well come a time when technology becomes so pervasive and evolves so quickly that human workers are no longer able to adapt fast enough.

One of the earliest predictions of this personless workforce came courtesy of an English economist who famously observed:

“We are being afflicted with a new disease of which some readers may not yet have heard the name, but of which they will hear a great deal in the years to come, namely, technological unemployment.

This means unemployment due to our discovery of means of economizing the use of labor outrunning the pace at which we can find new uses for labor.”

That economist was John Maynard Keynes, and the excerpt was from his 1930 essay “Economic Possibilities for our Grandchildren.” Well, here we are some 90 years later (and had Keynes had any grandchildren they’d be well into retirement by now, if not moved on to that great job market in the sky), and the “disease” he spoke of never materialized. It might be tempting to say that Keynes’s prediction was flat-out wrong, but there is reason to believe that he was just really early.

Fears of technological unemployment have ebbed and flowed through the decades, but recent trends are spurring renewed debate as to whether we may—in the not-crazy-distant future—be innovating ourselves toward unprecedented economic upheaval. This past September in New York City, there was even a World Summit on Technological Unemployment that featured economic heavies like Robert Reich (Secretary of Labor during the Clinton administration), Larry Summers (Secretary of the Treasury, also under Clinton), and Nobel Prize–winning economist Joseph Stiglitz.

Why Might 2019 Be Much More Precarious Than 1930?

Today, particularly disruptive technologies like artificial intelligence, robotics, 3D printing, and nanotechnology are not only steadily advancing; the data show that their rate of advancement is itself increasing. The most famous example is Moore’s Law, which has tracked with near-flawless accuracy how the number of transistors on a chip, and with it processor power, doubles roughly every two years.
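To make “exponentially” concrete, here is a minimal Python sketch assuming the commonly quoted form of Moore’s Law, one doubling roughly every two years. The two-year period and the function name `moores_law_factor` are illustrative choices, not a precise model of any chip roadmap.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth multiple after `years` if capacity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)

for span in (10, 20, 40):
    # Steady (linear) progress would add the same amount each decade;
    # a fixed doubling period multiplies instead.
    print(f"{span} years: x{moores_law_factor(span):,.0f}")
# 10 years: x32
# 20 years: x1,024
# 40 years: x1,048,576
```

Each additional decade multiplies the previous total by 32, which is why the later steps dwarf the earlier ones.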

Furthermore, as each of these technologies develops, it will hasten the development of the others (for example, artificial intelligence might program 3D printers to create the next generation of robots, which in turn will build even better 3D printers).

It’s What Futurist and Inventor Ray Kurzweil Has Described As The Law of Accelerating Returns

Everything is Getting Faster—Faster

The evolution of recorded music illustrates this point. It’s transformed dramatically over the past century, but the majority of that change has occurred in just the past two decades. Analog discs were the most important medium for more than 60 years before they were supplanted by CDs and cassettes in the 1980s, only to be taken over two decades later by MP3s, which are now rapidly being replaced by streaming audio. This is the type of acceleration that permeates modernity.

“I believe we’re reaching an inflection point,” explains Martin Ford, a software entrepreneur and the author of Rise of the Robots.

“Specifically in the way that machines—algorithms—are starting to pick up cognitive tasks. In a limited sense, they’re starting to think like people. It’s not like in agriculture, where machines were just displacing muscle power for mechanical activities. They’re starting to encroach on that fundamental capability that sets us apart as a species—the ability to think.”

“The second thing [that is different than the Industrial Revolution] is that information technology is so ubiquitous. It’s going to invade the entire economy, every employment sector. So there isn’t really a safe haven for workers. It’s really going to impact across the board. I think it’s going to make virtually every industry less labor-intensive.”

To what extent this fundamental shift will take place—and on what timescale—is still very much up for debate. Even if there isn’t the mass economic cataclysm some fear, many of today’s workers are completely unprepared for a world in which it’s not only the steel-driving John Henrys who find that machines can do their job better (and for far cheaper), but the Michael Scotts and Don Drapers, too. A white-collar job and a college degree no longer offer any protection from automation.

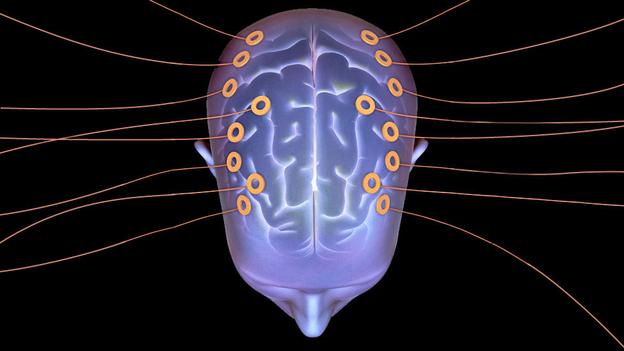

If I Only Had a Brain

There is one technology in particular that stands out as a disruption super-tsunami in waiting. Machine learning is a subfield of AI that makes it possible for computers to perform complex tasks for which they weren’t specifically programmed—indeed, for which they couldn’t be programmed—by enabling them to both gather information and utilize it in useful ways.

Machine learning is how Pandora knows what songs you’ll enjoy before you do. It’s how Alexa and other virtual assistants are able to adapt to the peculiarities of your voice commands. It even rules over global finances (high-frequency trading algorithms now account for more than three-quarters of all stock trades; one venture capital firm, Deep Knowledge Ventures, has gone so far as to appoint an algorithm to its board of directors).

Another notable example—and one that will itself displace thousands, if not millions, of human jobs—is the software used in self-driving cars. We may think of driving as a task involving a simple set of decisions (stop at a red light, make two lefts and a right to get to Bob’s house, don’t run over anybody), but the realities of the road demand that drivers make lots of decisions—far more than could ever be accounted for in a single program.

It would be difficult to write code that could handle, say, the wordless negotiation between two drivers who simultaneously arrive at a four-way-stop intersection, let alone the proper reaction to a family of deer galloping into heavy traffic. But machines are able to observe human behavior and use that data to approximate a proper response to a novel situation.

“People tried just inputting all the rules of the road, but that doesn’t work,” explains Pedro Domingos, professor of computer science at the University of Washington and author of The Master Algorithm. “Most of what you need to know about driving are things that we take for granted, like looking at the curve in a road you’ve never seen before and turning the wheel accordingly.

To us, this is just instinctive, but it’s difficult to teach a computer to do that. But [one] can learn by observing how people drive. A self-driving car is just a robot controlled by a bunch of algorithms with the accumulated experience of all the cars it has observed driving before—and that’s what makes up for a lack of common sense.”
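Domingos’s point can be illustrated with a toy version of learning from demonstration (often called behavioral cloning). This is a minimal sketch under heavy assumptions: one made-up input (the curvature of the road ahead), one output (steering angle), synthetic “human” data generated inside the code, and a straight-line model fit by least squares. Real self-driving systems use far richer sensors and models; nothing here reflects Google’s or anyone else’s actual software, and `fit_line` and `steer` are hypothetical names.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares fit of ys ≈ slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

# Synthetic "observed human driving" (hypothetical, not real data):
# road curvature ahead (1/m) and the steering angle (degrees) a driver chose.
# The hidden rule, 1500 degrees per unit of curvature plus noise, is invented.
random.seed(0)
curvatures = [random.uniform(-0.02, 0.02) for _ in range(500)]
steering = [1500 * c + random.gauss(0, 0.5) for c in curvatures]

# "Learning by observation": no driving rule is written down, only fitted.
slope, intercept = fit_line(curvatures, steering)

def steer(curvature):
    """Imitate the observed drivers on a curve the model has never seen."""
    return slope * curvature + intercept

print(f"learned slope: {slope:.0f} deg per 1/m")
print(f"steering for a new curve: {steer(0.013):.1f} deg")  # roughly 19.5
```

The program never contains the rule “turn 15 degrees for this much curve”; it recovers an approximation of it from examples, which is the shift from hand-written rules to learned behavior that the quote describes.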

Mass adoption of self-driving cars is still many years away, but by all accounts they are quite capable at what they do right now (though Google’s autonomous car apparently still has trouble discerning the difference between a deer and a plastic bag blowing in the wind). That’s truly amazing when you look at what computers were able to achieve only a decade ago.

With the prospect of accelerating evolution, we can only imagine what tasks they will be able to take on in another 10 years.

Is There a There There?

No one disputes that technology will continue to achieve once-unthinkable feats, but the idea that mass technological unemployment is an inevitable result of these advancements remains controversial.

Many economists maintain an unshakable faith in The Market and its ability to provide jobs regardless of what robots and other assorted futuristic machines happen to be zooming around. There is, however, one part of the economy where technology has, beyond the shadow of any doubt, pushed humanity aside: manufacturing.

Between 1975 and 2011, manufacturing output in the U.S. more than doubled (and that’s despite NAFTA and the rise of globalization), while the number of (human) workers employed in manufacturing positions decreased by 31 percent.

This dehumanizing of manufacturing isn’t just a trend in America—or even rich Western nations—it’s a global phenomenon.

It found its way into China, too, where manufacturing output increased by 70 percent between 1996 and 2008 even as manufacturing employment declined by 25 percent over the same period.
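Both sets of figures imply a large jump in output per worker. A rough sketch of the arithmetic, assuming output per worker is a fair proxy for labor productivity (it ignores hours worked, product mix, and offshoring). Because U.S. output “more than doubled,” the sketch plugs in exactly +100%, so the U.S. result is a lower bound.

```python
def output_per_worker_multiple(output_change_pct: float, employment_change_pct: float) -> float:
    """How many times output per worker grew, given % changes in output and in headcount."""
    output_multiple = 1 + output_change_pct / 100
    employment_multiple = 1 + employment_change_pct / 100
    return output_multiple / employment_multiple

# U.S.: output "more than doubled" (+100% used here, so this is a floor); jobs -31%.
us = output_per_worker_multiple(100, -31)
# China: output +70%, manufacturing employment -25%.
china = output_per_worker_multiple(70, -25)

print(f"U.S. 1975-2011: at least x{us:.2f}")   # at least x2.90
print(f"China 1996-2008: x{china:.2f}")         # x2.27
```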

There’s a general consensus among economists that our species’ decreasing relevance in manufacturing is directly attributable to technology’s ability to make more stuff with fewer people.

And what business wouldn’t trade an expensive, lunch-break-addicted human workforce for a fleet of never-call-out-sick machines?

Answer: All the ones driven into extinction by the businesses that did.

The $64 trillion question is whether this trend will be replicated in the services sector that more than two-thirds of U.S. employees now call their occupational home. And if it is, where will all those human workers move on to next?

“There’s no doubt that automation is already having an effect on the labor market,” says James Pethokoukis, a fellow with the libertarian-leaning American Enterprise Institute. “There’s been a lot of growth at high-end jobs, but we’ve lost a lot of middle-skill jobs—the kind where you can create a step-by-step description of what those jobs are, like bank tellers or secretaries or front-office people.”

It may be tempting to discount fears about technological unemployment when we see corporate profits routinely hitting record highs. Even the unemployment rate in the U.S. has fallen back to pre-economic-train-crash levels. But we should keep in mind that participation in the labor market remains mired at the lowest levels seen in four decades.

There are numerous contributing factors here (not the least of which is the retiring baby boomers), but some of it is surely due to people so discouraged with their prospects in today’s job market that they simply “peace out” altogether.

Another important plot development to consider is that even among those with jobs, the fruits of this increased productivity are not shared equally. Between 1973 and 2013, average U.S. worker productivity in all sectors increased an astounding 74.4 percent, while hourly compensation only increased 9.2 percent.
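A quick way to see the size of that gap is to compare the two growth multiples directly. This sketch simply reuses the percentages quoted above; reading the ratio as “output per dollar of hourly pay” assumes both series are measured in comparable real terms.

```python
productivity_growth = 0.744  # +74.4% output per hour, 1973-2013 (figure quoted above)
compensation_growth = 0.092  # +9.2% hourly compensation over the same period

# Ratio of the two growth multiples: how much more output an hour of work
# yields per dollar of hourly pay than it did at the start of the period.
gap = (1 + productivity_growth) / (1 + compensation_growth)
print(f"output per dollar of pay: x{gap:.2f}")  # x1.60

# Crude ratio of the percentage gains: pay rose by about an eighth as much.
print(f"pay growth / productivity growth: {compensation_growth / productivity_growth:.0%}")  # 12%
```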

It’s hard not to conclude that human workers are simply less valuable than they once were.

So What Now, Humans?

Let’s embark on a thought experiment and assume that technological unemployment is absolutely happening and its destructive effects are seeping into every employment nook and economic cranny. (To reiterate: This is far from a consensus viewpoint.) How should society prepare? Perhaps we can find a way forward by looking to our past.

Nearly two centuries ago, as the United States entered the Industrial Revolution, it also underwent a parallel revolution in education known as the Common School Movement. In response to the economic upheavals of the day, society began to promote the radical concept that all children should have access to a basic education regardless of their family’s wealth (or lack thereof).

Perhaps most important, students in these new “common schools” were taught standardized skills and adherence to routine, which helped them go on to become capable factory workers.

Adaptability: The Most Valuable Skill We Can Learn

“This time around we have the digital revolution, but we haven’t had a parallel revolution in our education system,” says economist and Education Evolution founder Lauren Paer.

“There’s a big rift between the modern economy and our education system. Students are being prepared for jobs in the wrong century. Adaptability will be the most valuable skill we can learn. We need to promote awareness of a landscape that is going to change quickly.”

In addition to helping students learn to adapt—in other words, learn to learn—Paer encourages schools to place more emphasis on cultivating the soft skills in which “humans have a natural competitive advantage over machines,” she says. “Things like asking questions, planning, creative problem solving, and empathy—those skills are very important for sales, it’s very important for marketing, not to mention in areas that are already exploding, like eldercare.”

One source of occupational hope lies in the fact that even as technology removes humanity from many positions, it can also help us retrain for new roles. Thanks to the Internet, there are certainly more ways to access information than ever before.

Furthermore (if not somewhat ironically), advancing technologies can open new opportunities by lowering the bar to positions that previously required years of training; people without medical degrees might be able to handle preliminary emergency room diagnoses with the aid of an AI-enabled device, for example.

So, perhaps we shouldn’t view these bots and bytes as interlopers out to take our jobs, but rather as tools that can help us do our jobs better. In fact, we may not have any other course of action—barring a global Amish-style rejection of progress, increasingly capable and sci-fabulous technologies are going to come online.

That much is a given. The workers who learn to embrace these technologies will fare best.

“There will be a lot of jobs that won’t disappear, but they will change because of machine learning,” says Domingos. “I think what everyone needs to do is look at how they can take advantage of these technologies. Here’s an analogy: A human can’t win a race against a horse, but if you ride a horse, you’ll go a lot further.

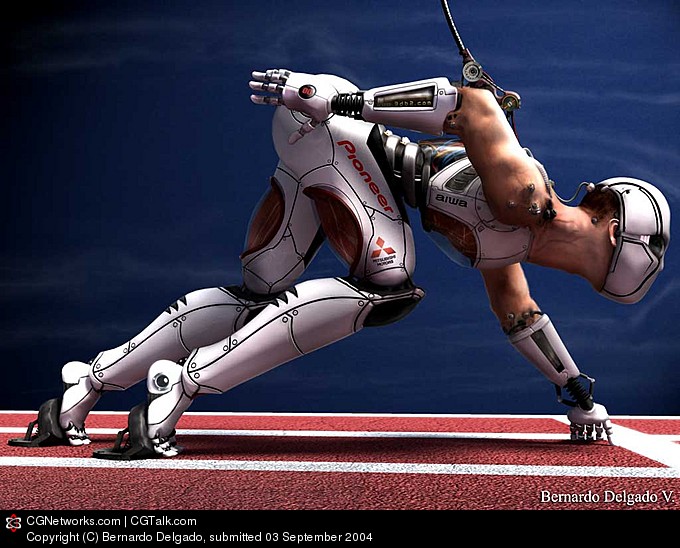

We all know that Deep Blue beat Kasparov and then computers became the best chess players in the world—but that’s actually not correct. The current world champions are what we call ‘centaurs,’ that’s a team of a human and a computer.

A human and a computer actually complement each other very well. And, as it turns out, human-computer teams beat all solely human or solely computer competitors. I think this is a good example of what’s going to happen in a lot of areas.”

Technologies such as machine learning can indeed help humans (at least those with the technical know-how) excel. Take the example of Cory Albertson, a “professional” fantasy sports bettor who has earned millions from daily gaming sites by using hand-crafted algorithms to gain an edge over human competitors whose strategies are often based on little more than what they gleaned from last night’s SportsCenter.

Also, consider the previously mentioned stock-trading algorithms that have enabled financial players to amass fortunes on the market. In the case of these so-called “algo-trading” scenarios, the algorithms do all the heavy lifting and rapid trading, but carbon-based humans are still in the background implementing the investment strategies.

Of course, even with the most robust educational reform and distributed technical expertise, accelerating change will probably push a substantial portion of the workforce to the sidelines. There are only so many people who will be able to use coding magic to their benefit. And that type of disparity can only turn out badly.

Democratic Socialism?

One possible solution many economists have proposed is some form of universal basic income (UBI), aka just giving people money. As you might expect, this concept has the backing of many on the political left, but it’s also had notable supporters on the right (libertarian economic rock star Friedrich Hayek famously endorsed the concept).

Still, many in the U.S. are positively allergic to anything with even the faintest aroma of “socialism.”

“It’s really not socialism—quite the opposite,” comments Ford, who supports the idea of a UBI at some point down the road to counter the inability of large swaths of society to earn a living the way they do today. “Socialism is about having the government take over the economy, owning the means of production, and—most importantly—allocating resources…. And that’s actually the opposite of a guaranteed income.

The idea is that you give people enough money to survive on and then they go out and participate in the market just as they would if they were getting that money from a job. It’s actually a free market alternative to a safety net.”

The exact shape of a Homo sapiens safety net depends on whom you ask. Paer endorses a guaranteed jobs program, possibly in conjunction with some form of UBI.

“The conservative version would be through something like a negative income tax,” says Pethokoukis. “If you’re making $15 per hour and we as a society think you should be making $20 per hour, then we close the gap. We would cut you a check for $5 per hour.”
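Pethokoukis’s $15/$20 example can be written as a one-line rule. This is a minimal sketch, not a policy design: `hourly_top_up`, its $20 default target, and the `subsidy_rate` parameter are illustrative assumptions. His example closes the whole gap (a rate of 1.0); Milton Friedman’s original negative income tax instead paid only a fraction of the shortfall below a threshold, which a rate below 1.0 approximates.

```python
def hourly_top_up(wage: float, target_wage: float = 20.0, subsidy_rate: float = 1.0) -> float:
    """Hourly payment that closes (a fraction of) the gap between a wage and a target.

    subsidy_rate=1.0 reproduces the example in the text (close the whole gap);
    a rate below 1.0 is closer to Friedman-style negative income tax designs.
    The target and the rate are illustrative assumptions.
    """
    shortfall = max(0.0, target_wage - wage)
    return subsidy_rate * shortfall

print(hourly_top_up(15.0))                    # 5.0, the $5/hour check from the example
print(hourly_top_up(15.0, subsidy_rate=0.5))  # 2.5
print(hourly_top_up(22.0))                    # 0.0, no payment above the target
```

Paying only part of the gap keeps every extra dollar earned worth something to the worker, which is the usual argument for a rate below 1.0.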

In addition to maintaining workers’ livelihoods, the very nature of work might need to be re-evaluated. Alphabet CEO Larry Page has suggested the implementation of a four-day workweek in order to allow more people to find employment.

This type of shift isn’t so pie-in-the-sky when you consider that, in the late 19th century, the average American worker logged nearly 75 hours per week, but the workweek evolved in response to new political, economic, and technological forces. There’s no real reason that another shift of this magnitude couldn’t (or wouldn’t) happen again.

If policies like these seem completely unattainable in America’s current gridlock-choked political atmosphere, that’s because they most certainly are. If mass technological unemployment does begin to manifest itself as some anticipate, however, it will bring about a radical new economic reality that will demand a radical new political response.

Toward the Star Trek Economy

Nobody knows what the future holds. But that doesn’t mean it isn’t fun to play the “what if” game. What if no one can find a job? What if everything comes under control of a few trillionaires and their robot armies?

And, most interesting of all: What if we’re asking the wrong questions altogether?

What if, after a tumultuous transition period, the economy evolves beyond anything we would recognize today? If technology continues on its current trajectory, it could ultimately lead to a world of abundance. In this new civilization 2.0, machines will conceivably be able to answer just about any question and make just about everything available.

So, what does that mean for us lowly humans?

“I think we’re heading towards a world where people will be empowered to spend their time doing what they enjoy doing, rather than what they need to be doing,” Planetary Ventures CEO, X-Prize cofounder, and devoted techno-optimist Peter Diamandis told me when I interviewed him last year.

“There was a Gallup Poll that said something like 70 percent of people in the United States don’t enjoy their job—they work to put food on the table and get health insurance to survive.

So, what happens when technology can do all that work for us and allow us to actually do what we enjoy with our time?”

It’s easy to imagine a not-so-distant future where automation takes over all the dangerous and boring jobs that humans do now only because they have to. Surely there are drudging elements of your workday that you wouldn’t mind outsourcing to a machine so you could spend more time with the parts of your job that you do care about.

One glass-half-full vision could look something like the galaxy portrayed in Star Trek: The Next Generation, where abundant food replicators and a post-money economy replaced the need to do… well, anything. Anyone in Starfleet could have chosen to spend all their time playing 24th-century video games without fear of starvation or homelessness, but they decided their time would be better spent exploring the unknown.

Captain Picard and the crew of the USS Enterprise didn’t work because they feared what would happen if they didn’t—they worked because they wanted to.

Nothing is inevitable, of course. A thousand things could divert us from this path. But if we ever do reach a post-scarcity world, then humanity will be compelled to undergo a radical reevaluation of its values. And maybe that’s not the worst thing that could happen to us.

Perhaps we shouldn’t fear the idea that all the jobs are disappearing. Perhaps we should celebrate the hope that nobody will have to work again.

China is Targeting a Combination of Mobile Internet, Cloud Computing, Big Data and the Internet of Things

China has been pursuing an “Internet Plus” action plan since early this year, with a particular focus on cloud computing. Earlier this year, the State Council also unveiled an opinion on promoting cloud computing, which expects China to have built up several internationally competitive cloud computing enterprises that control key cloud computing technologies by 2020, China Economic Net reports.

Premier Li Keqiang previously said “Internet Plus” entails integration of mobile internet, cloud computing, big data and Internet of Things with modern manufacturing in order to foster new industries and business development, including e-commerce, industrial internet and internet finance.

In the first quarter of 2015, the global cloud computing infrastructure market grew 25.1% to reach US$6.3 billion.

According to the action plan, China will push forward the integration of the Internet with traditional industries and drive the Internet’s expansion from consumer industries into manufacturing.

The action plan sets development targets and supporting measures for key sectors that the government hopes can establish new industrial modes by integrating with the Internet, including mass entrepreneurship and innovation, manufacturing, agriculture, energy, finance, public services, logistics, e-commerce, traffic, biology and artificial intelligence.

“The government aims to further deepen the integration of the Internet with the economic and social sectors, making new industrial modes a main driving force of growth,” according to the action plan.

By 2025, according to the plan, Internet Plus is expected to become a new economic model and an important driving force for economic and social innovation and development.

China’s Internet Plus plan is similar to GE’s Industrial Internet vision.

Robert Reich: The Wealthy Have Broken Society by Siphoning All Its Money to Themselves

By Scott Eric Kaufman

They already “undertook the upward redistribution by altering the rules of the game”

Robert Reich, the Secretary of Labor under Bill Clinton, published an essay in the most recent issue of the American Prospect in which he argues that wealth inequality is a problem not because of current economic policy, but because the financial elite started rigging the game against the middle and lower classes half a century ago.

Debates between those on the left and the right about the merits of government intervention versus the free market distract people from the real problem, Reich said, which is how different the market is from what it was 50 years ago.

“Its current organization is failing to deliver the widely shared prosperity it delivered then,” he explains, because it’s no longer designed to reward hard work. Everything from intellectual property rights to basic contract law has been altered at the behest of corporate interests, creating a system that, when functioning as intended, siphons money from the bottom to the top.

The entry-level wages of female college graduates have dropped by more than 8 percent, and those of male graduates by more than 6.5 percent. While a college education has become a prerequisite for joining the middle class, it is no longer a sure means of gaining ground once admitted to it. That’s largely because the middle class’s share of the total economic pie continues to shrink, while the share going to the top continues to grow.

A deeper understanding of what has happened to American incomes over the last 25 years requires an examination of changes in the organization of the market. These changes stem from a dramatic increase in the political power of large corporations and Wall Street to change the rules of the market in ways that have enhanced their profitability, while reducing the share of economic gains going to the majority of Americans.

This transformation has amounted to a redistribution upward, but not as “redistribution” is normally defined.

The government did not tax the middle class and poor and transfer a portion of their incomes to the rich.

The government undertook the upward redistribution by altering the rules of the game.

Gartner Predicts Top Trends For Technology

By Gil Press

Gartner revealed its predictions for top technology trends, the impact of technology on businesses and consumers, and the continuing evolution of the IT organization and the role of the CIO. Here is my summary of Gartner’s press releases:

Digital Disruption Will Give Rise to New Businesses, Some Created by Machines

Gartner predicts that a significant, disruptive digital business conceived by a computer algorithm will be launched. The most successful startups will mesh the digital world with existing physical logistics to create a consumer-driven network (think Airbnb and Uber) and challenge established markets made up of isolated physical units.

The meshing of the digital with the physical will affect how we think about product development. In 2015, more than half of traditional consumer products had native digital extensions, and 50% of consumer product investments will be redirected to customer experience innovations. The wide availability of product, pricing and customer satisfaction information has eroded the competitive advantage that product innovation afforded in the past and is shifting attention to customer experience innovation as the key to lasting brand loyalty.

The meshing of the digital with the physical also means the rise of the Internet of Things. “This year,” says Gartner, “enterprises will spend over $40 billion designing, implementing and operating the Internet of Things.” That’s still a tiny slice of worldwide IT spending, which surpassed $3.9 trillion in 2015, a 3.9% increase from 2014.
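For scale, the two spending figures can be compared directly. Both are stated as floors (“over $40 billion,” “surpassed $3.9 trillion”), so this quick sketch gives only an approximate share, plus the 2014 total implied by the 3.9% growth figure.

```python
iot_spend = 40e9        # "over $40 billion" on the Internet of Things (a floor)
it_spend_2015 = 3.9e12  # worldwide IT spending, "surpassed $3.9 trillion" (a floor)
growth_vs_2014 = 0.039  # "a 3.9% increase from 2014"

iot_share = iot_spend / it_spend_2015
it_spend_2014 = it_spend_2015 / (1 + growth_vs_2014)

print(f"IoT share of IT spending: about {iot_share:.1%}")                  # about 1.0%
print(f"implied 2014 IT spending: ${it_spend_2014 / 1e12:.2f} trillion")   # $3.75 trillion
```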

But much of this spending will be driven by the digital economy, and the Internet of Things is in the driver’s seat. Gartner defines digital business as new business designs that blend the virtual and physical worlds, changing how processes and industries work through the Internet of Things.

Consider this: since 2013, 650 million new physical objects have come online; 3D printers became a billion-dollar market; 10% of automobiles became connected; and the number of Chief Data Officer and Chief Digital Officer positions has doubled.

But fasten your seat belts: all of these are predicted to keep doubling every year for the foreseeable future.
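Doubling every year compounds quickly. A minimal sketch, taking the 650 million new connected objects as a purely illustrative starting point and assuming the doubling really does continue (a big assumption); `project_doubling` is a hypothetical helper name.

```python
def project_doubling(start: float, years: int) -> float:
    """Value after `years` of doubling once per year."""
    return start * 2 ** years

start = 650e6  # illustrative starting point: new connected objects since 2013
for years in range(1, 6):
    print(f"after {years} year(s): {project_doubling(start, years) / 1e9:.1f} billion")
# after 1 year(s): 1.3 billion
# after 2 year(s): 2.6 billion
# after 3 year(s): 5.2 billion
# after 4 year(s): 10.4 billion
# after 5 year(s): 20.8 billion
```

Five doublings multiply the starting figure by 32 and ten by 1,024, so even modest starting numbers become enormous if the trend holds.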

Managing and Leading the Digital Business, Mostly by Humans

Gartner’s survey of 2,800 CIOs in 84 countries showed that CIOs are fully aware that they will need to change in order to succeed in the digital business, with 75% of IT executives saying that they need to change their leadership style in the next three years.

“The exciting news for CIOs,” says Gartner, “is that despite the rise of roles, such as the chief digital officer, they are not doomed to be an observer of the digital revolution.” According to the survey, 41% of CIOs report to their CEO. Gartner notes that this is a return to one of the highest levels ever recorded, no doubt because of the increasing importance of information technology to all businesses.

Still, reporting to the CEO does not necessarily mean leading the digital initiatives of the business. Reports from the Symposium highlighted another Gartner finding: while CIOs say they are driving 47% of digital leadership, only 15% of CEOs agree.

Similarly, while CIOs estimate that 79% of IT spending will be “inside” the IT budget (up slightly from last year), Gartner says that over 50% of total IT spending is already outside of IT. This is a “shift of demand and control away from IT and toward digital business units closer to the customer,” says Gartner. It further estimates that 50% of all technology salespeople are actively selling directly to business units, not IT departments.

Gartner sees the established behaviors and beliefs of the IT organization, the “best practices” that have served it well in previous years, as the biggest obstacle for CIOs in their pursuit of digital leadership.

Process management and control are not as important as vision and agility. Compounding the problems of an obsolete leadership style and inadequate skills is a fundamental requirement of the digital business:

It must be unstable. In 2017, 70% of successful digital business models relied on deliberately unstable processes designed to shift as customer needs shift. “This holistic approach,” says Gartner, “blending business model, processes, technology and people will fuel digital business success.”

The Rise of Smart Machines

There will be more than 40 vendors with commercially available managed services offerings leveraging smart machines and industrialized services. In 2018, the total cost of ownership for business operations will be reduced by 30% through smart machines and industrialized services.

Smart machines are an emerging “super class” of technologies that perform a wide variety of work, of both the physical and the intellectual kind. Smart machines will automate decision making. Therefore, they will not only affect jobs based on physical labor, but they will also impact jobs based on complex knowledge worker tasks.

“Smart machines,” says Gartner, “will not replace humans as people still need to steer the ship and are critical to interpreting digital outcomes.” But these humans will have new types of jobs.

Top Digital Jobs

In 2018, Gartner says, digital business requires 50% fewer business process workers and 500% more key digital business jobs than traditional models.

The top jobs for digital over the next seven years will be:

• Integration Specialists

• Digital Business Architects

• Regulatory Analysts

• Risk Professionals

Gartner: “You must build talent for the digital organization of 2020 now. Not just the digital technology organization, but the whole enterprise. Talent is the key to digital leadership.”

Where Things Can Go Wrong

Gartner highlights two areas of potential vulnerability as businesses pursue digital opportunities: lack of portfolio management skills and inadequate risk management.

By year-end 2016, 50% of digital transformation initiatives were unmanageable due to a lack of portfolio management skills, leading to a measurable loss of market share. The digital business brings with it vastly different and higher levels of risk, say 89% of CIOs, and 69% believe that the discipline of risk management is not keeping up.

Gartner: "CIOs need to review with the enterprise and IT risk leaders whether risk management is adapting fast enough to a digital world." This is also an urgent task, I might add, for CEOs and the board of directors.

The Pursuit of Longer Life and Increased Happiness

Gartner predicts that all these connected devices will have a positive impact on our health. Smartphone use will reduce the costs of diabetic care by 10%. By 2020, life expectancy in the developed world will increase by 0.5 years due to widespread adoption of wireless health monitoring technology.

Retail could be the industry most affected by the digital tsunami, which will drastically alter our shopping experience. Mobile digital assistants will take over mundane tactical tasks such as filling in names, addresses and credit card information.

More than $2 billion in online shopping was performed exclusively by mobile digital assistants in 2015. That yearly total represents about 2.5 percent of mobile users trusting assistants with $50 a year, or roughly 40 million shoppers.

The mobile engagement behavior of U.S. customers will drive mobile commerce to 50% of U.S. digital commerce revenue. Interest in mobile payments is reviving, alongside a significant increase in mobile commerce. Retailers will make more offers based on a customer's location and the length of time spent in the store. By 2020, retail businesses that use targeted messaging in combination with internal positioning systems (IPS) will see a 5% increase in sales.

These Trends Seem to be a Logical Extension of Current Technologies

But 3D printing will bring us a completely new shopping experience and greatly widen what's on offer. In 2015, more than 90% of online retailers of durable goods were actively seeking external partnerships to support new "personalized" product business models, and in 2017, nearly 20% of these retailers used 3D printing to create personalized product offerings.

Gartner: “The companies that set the strategy early will end up defining the space within their categories.”

Gartner made this statement specifically about the personalized products business, but it holds for every type of digital business. It also applies to the new management processes and attitudes that all organizations should adopt, sooner rather than later.

Gartner Says Digital Business Economy is Resulting in Every Business Unit Becoming a Technology Startup

Nobel Laureates Call for a Revolutionary Shift in How Humans Use Resources

Eleven holders of prestigious prize say excessive consumption threatening planet, and humans need to live more sustainably

Deforestation is among a growing list of planetary ailments, the Nobel laureates warn. Photograph: Sipa Press/Rex Features


Eleven Nobel laureates pooled their clout to sound a warning, declaring that mankind is living beyond its means and darkening its future.

At a conference in Hong Kong coinciding with the annual Nobel awards season, holders of the prestigious prize will plead for a revolution in how humans live, work and travel. Only by switching to smarter, less greedy use of resources can humans avoid wrecking the ecosystems on which they depend, the laureates will argue.

The state of affairs is “catastrophic”, Peter Doherty, 1996 co-winner of the Nobel prize for medicine, said in a blunt appraisal. He is among 11 laureates scheduled to attend the four-day huddle from Wednesday – the fourth in a series of Nobel symposia on the precarious state of the planet.

From global warming, deforestation and soil and water degradation to ocean acidification, chemical pollution and environmentally-triggered diseases, the list of planetary ailments is long and growing, Doherty said. The worsening crisis means consumers, businesses and policymakers must consider the impact on the planet of every decision they make, he said.

“We need to think sustainability – food sustainability, water sustainability, soil sustainability, sustainability of the atmosphere.”

Overlapping with the 2014 Nobel prize announcements from 6-13 October, the laureates’ gathering will focus on the prospect that global warming could reach double the UN’s targeted ceiling of 2C over pre-industrial times. Underpinning their concern are new figures highlighting that humanity is living absurdly beyond its means.

According to the latest analysis by environmental organisation WWF, mankind is using 50% more resources than nature can replenish. “The peril seems imminent,” said US-Australian astrophysicist Brian Schmidt, co-holder of the 2011 Nobel physics prize for demonstrating an acceleration in the expansion of the universe.

The threat derives from “our exponentially growing consumption of resources, required to serve the nine billion or so people who will be on planet Earth by 2050, all of whom want to have lives like we have in the western world,” said Schmidt. “We are poised to do more damage to the Earth in the next 35 years than we have done in the last 1,000.”

Ada Yonath, an Israeli crystallographer co-awarded the 2009 Nobel for chemistry, said sustainability should not just be seen as conservation of animals and plants. Humankind should also be much more careful in its use of other life-giving resources like antibiotics. The spread of drug-resistant bacteria through incorrect use has become a key challenge in “sustainability for the future of humankind”, she stressed.

Several of the Laureates Suggested a Focus on Energy

Dirty fossil fuels must be quickly phased out in favour of cleaner sources – and, just as importantly, the new technology has to spread quickly in emerging economies. If these countries fail to adopt clean alternatives, they will continue to depend on cheap, plentiful fossil fuels to power their rise out of poverty. “This will lead to major climate change in the future, and might well destabilize a large fraction of the world’s population due to the change of [climate] conditions,” warned Schmidt.

The climate impact of Asia’s rapid urbanization will be one of the meeting’s focus areas. Another concern aired by laureates was the need to strip away blinders about the danger, while remaining patient in explaining to people why change would be to their advantage.

George Smoot, co-awarded the 2006 physics prize for his insights into the big bang that created the universe, gave the example of LED lighting, a low-carbon substitute for inefficient incandescent bulbs. "A great innovation is not enough," he said. "It must be adopted and used widely to have major impact, and that starts with general understanding. But until people move from old incandescent bulbs to the new ones, the impact is much less." "So we need the solutions, for authorities to authorize or encourage their use through regulation, and for people to adopt them." And that could only work once everyone understands the benefits for humanity as a whole, but also for themselves, said Smoot.

The Next 20 Years Are Going to Make the Last 20 Look Like We Accomplished Nothing in Tech

By ALYSON SHONTELL


The world is hitting its stride in technological advances, and futurists have been making wild-sounding bets on what we’ll accomplish in the not-so-distant future.

Futurist Ray Kurzweil, for example, believes that by 2040 artificial intelligence will be so good that humans will be fully immersed in virtual reality and that something called the Singularity, when technology becomes so advanced that it changes the human race irreversibly, will occur.

Kevin Kelly, who helped launch Wired in 1993, sat down for an hour-long video interview with John Brockman at Edge. Kelly believes the next 20 years in technology will be so radical that they will make the previous 20 years "pale" in comparison.

“If we were sent back with a time machine, even 20 years, and reported to people what we have right now and describe what we were going to get in this device in our pocket — we’d have this free encyclopedia, and we’d have street maps to most of the cities of the world, and we’d have box scores in real time and stock quotes and weather reports, PDFs for every manual in the world … You would simply be declared insane,” Kelly said.

"But the next 20 years are going to make this last 20 years just pale," he continued. "We're just at the beginning of the beginning of all these kind of changes. There's a sense that all the big things have happened, but relatively speaking, nothing big has happened yet. In 20 years from now we'll look back and say, 'Well, nothing really happened in the last 20 years.'"


What will these mind-blowing changes look like? He mentioned a few thoughts during the interview with Brockman.

Robots Are Going to Make Lots of Things

“Certainly most of the things that are going to be produced are going to be made by robots and automation, but [humans] can modify them and we can change them, and we can be involved in the co-production of them to a degree that we couldn’t in the industrial age,” Kelly says.

“That’s sort of the promise of 3D printing and robotics and all these other high-tech material sciences is that it’s going to become as malleable.”

Tracking and Surveillance Are Only Going to Get More Prevalent

But they may move toward “coveillance” so that we can control who’s monitoring us and what they’re monitoring.

“It’s going to be very, very difficult to prevent this thing that we’re on all the time 24 hours, seven days a week, from tracking, because all the technologies — from sensors to quantification, digitization, communication, wireless connection — want to track, and so the internet is going to track,” says Kelly.

“We’re going to track ourselves. We’re going to track each other. Government and corporations are going to track us. We can’t really get out of that. What we can try and do is civilize and make a convivial kind of tracking.”

Kelly says the solution may be to let people see who is tracking them and what is being tracked, and to give them the ability to correct inaccurate information. Right now, people just feel like they're being spied on, and they can't control who's watching them or what information is being surfaced.

Everything Really Will be About ‘Big Data’

Kelly admits that big data is a buzzword, but he thinks it deserves to be.

“We’re in the period now where the huge dimensions of data and their variables in real time needed for capturing, moving, processing, enhancing, managing, and rearranging it, are becoming the fundamental elements for making wealth,” says Kelly.

“We used to rearrange atoms, now it’s all about rearranging data. That is really what we’ll see in the next 10 years … They’re going to release data from language to make it machine-readable and recombine it in an infinite number of ways that we’re not even thinking about.”

Asking the Right Questions Will Become More Valuable than Finding Answers

In the age of Google and Wikipedia, answers to countless questions are free. Kelly believes that asking good questions will become much more important in the future than finding one-off answers.

“Every time we use science to try to answer a question, to give us some insight, invariably that insight or answer provokes two or three other new questions,” he says. “While science is certainly increasing knowledge, it’s actually increasing our ignorance even faster.”

“In a certain sense what becomes really valuable in a world running under Google’s reign are great questions, and that means that for a long time humans will be better at than machines. Machines are for answers. Humans are for questions.”